MCP Servers with Code Mode: The missing piece in Agentic AI

A practical look at why MCP tool calling hits scaling limits, and how Code Mode (typed APIs + sandboxed execution) unlocks efficient multi-step agent workflows.

Dec 29, 2025


written by Aman Kumar Nirala

I started working on an MCP SDK for Node.js (TypeScript) as an internal project at my organization around six months ago. Along the way, my team lead at Synergyboat came across Cloudflare’s idea around “Code Mode” and brought it to my attention. I found it interesting. So interesting, in fact, that we spent the past couple of months adding the feature to our own implementation.

Now that it’s done, I sit here trying to reflect on the whole process, the level of complexity that went into this, and to achieve… what exactly?

To understand where we are heading, we need to answer some fundamental questions:

We want to tell LLM agents how to use a set of APIs via a standardized definition.

Anthropic says, “Here is the structure, we call it MCP, use this…”

I believe it is.

Sadly, it did. It is over-engineered.

Think about the objective here: I want to tell LLMs how to use a function. Whether it’s an API, a CLI command, or anything else callable.

This is one of the classic problems of NLP. Consider how a developer understands how to use a function or tool. If we treat the function as a black box, all we need to know is:

And guess what? We’ve been doing this since we started organizing programs in large codebases.

`--help` text is one form of it. A doc comment is another:

```typescript
/**
 * Calculates the total price including tax.
 * @param basePrice - The base price before tax
 * @param taxRate - Tax rate as a decimal (e.g., 0.1 for 10%)
 * @returns The total price including tax
 */
function calculateTotal(basePrice: number, taxRate: number): number {
  return basePrice * (1 + taxRate);
}
```

That’s it. That’s all an LLM needs to understand how to use this function. We’ve had this pattern for decades.

Anthropic introduced MCP in November 2024 as an open standard for connecting AI systems with external tools and data sources. The goal was noble: create a “USB-C port for AI,” a universal interface.

But here’s the question that started nagging me:

Did we really need a completely new schema that LLMs never saw in their training data?

And now we sit here discussing how poorly JSON conveys meaning and context to LLMs. The irony is palpable.

So what are we doing in Code Mode now? What did Cloudflare propose? What did Anthropic endorse? What am I doing in my project?

Let me walk you through the process, and we can laugh together:

Or in simpler terms:

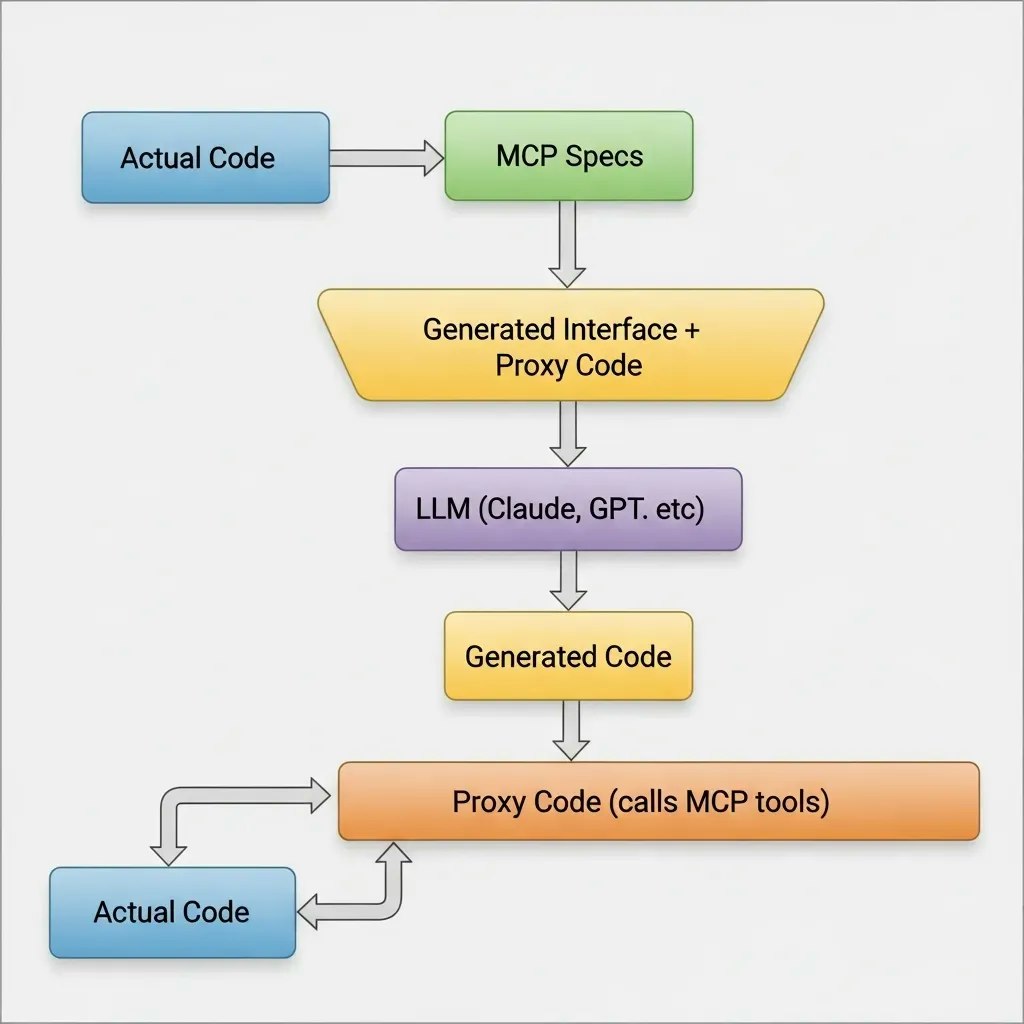

Actual Code → MCP Specs → Generated Interface + Proxy → [LLM] → Generate Code → Proxy → Actual Code
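To make the two Proxy hops in that chain concrete, here is a minimal sketch of how generated code can reach the real tools. Everything in it is an assumption for illustration: `createToolProxy`, `runGenerated`, and the `callTool` transport are hypothetical names, not the API of my SDK or of Cloudflare's implementation, and `new Function` is only a stand-in for a sandbox.

```typescript
// Hypothetical transport: a real SDK would send a tool call to the MCP server here.
type CallTool = (name: string, args: Record<string, unknown>) => Promise<unknown>;

// The object handed to generated code. Every property behaves like an async tool function.
type ToolApi = Record<string, (args?: Record<string, unknown>) => Promise<unknown>>;

function createToolProxy(callTool: CallTool): ToolApi {
  return new Proxy({} as ToolApi, {
    get(_target, prop) {
      // Ignore symbols and `then` so the proxy is never mistaken for a promise.
      if (typeof prop === "symbol" || prop === "then") return undefined;
      return (args: Record<string, unknown> = {}) => callTool(prop, args);
    },
  });
}

// Stand-in for the sandbox: wraps the generated body in an async function that
// only receives `api`. This is NOT a security boundary; a real deployment would
// run the code in an isolate or a separate process.
function runGenerated(body: string, api: ToolApi): Promise<unknown> {
  const fn = new Function("api", `return (async () => { ${body} })();`);
  return fn(api) as Promise<unknown>;
}

// Fake transport that records which tools were called and echoes the request.
const calls: string[] = [];
const api = createToolProxy(async (name, args) => {
  calls.push(name);
  return { tool: name, args };
});

// Pretend this string came back from the LLM.
const generated = `
  const tables = await api.dbListTables({});
  const count = await api.dbCount({ table: "sessions" });
  return { tables, count };
`;

runGenerated(generated, api).then((result) => console.log(calls, result));
```

Wrapping the body in an async function is also what lets a generated script use `await` and a bare `return` at its top level.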

Let’s ask the original question again:

All these layers… was it all needed to achieve the task of giving the LLM three things about using a function?

We created a new JSON schema that LLMs never saw in training. Then we realized LLMs struggle with JSON for semantic understanding. So we… convert it back to TypeScript interfaces. Because LLMs are trained on massive amounts of TypeScript code and understand it better.
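The conversion step is less exotic than it sounds. An MCP tool definition carries a `name`, a `description`, and an `inputSchema` in JSON Schema, and that is enough to print a documented TypeScript declaration. The sketch below assumes flat inputs with primitive types only; `toTypeScriptDeclaration` and the `Promise<unknown>` return type are my own illustrative choices, not what any particular SDK generates.

```typescript
// Minimal subset of JSON Schema, enough for flat tool inputs (an assumption of this sketch).
type JsonSchemaProp = {
  type: "string" | "number" | "integer" | "boolean";
  description?: string;
};

// The parts of an MCP tool definition this sketch uses.
interface McpTool {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, JsonSchemaProp>;
    required?: string[];
  };
}

// JSON Schema "integer" has no TypeScript counterpart, so it becomes `number`.
function tsType(prop: JsonSchemaProp): string {
  return prop.type === "integer" ? "number" : prop.type;
}

// Render one tool as a documented TypeScript function declaration.
function toTypeScriptDeclaration(tool: McpTool): string {
  const required = new Set(tool.inputSchema.required ?? []);
  const entries = Object.entries(tool.inputSchema.properties);

  const params = entries.map(([name, prop]) =>
    prop.description ? ` * @param args.${name} - ${prop.description}` : ` * @param args.${name}`
  );
  const fields = entries.map(
    ([name, prop]) => `${name}${required.has(name) ? "" : "?"}: ${tsType(prop)}`
  );

  return [
    "/**",
    ` * ${tool.description}`,
    ...params,
    " */",
    `declare function ${tool.name}(args: { ${fields.join("; ")} }): Promise<unknown>;`,
  ].join("\n");
}

// The calculateTotal function from earlier, described the MCP way.
const calculateTotalTool: McpTool = {
  name: "calculateTotal",
  description: "Calculates the total price including tax.",
  inputSchema: {
    type: "object",
    properties: {
      basePrice: { type: "number", description: "The base price before tax" },
      taxRate: { type: "number", description: "Tax rate as a decimal (e.g., 0.1 for 10%)" },
    },
    required: ["basePrice", "taxRate"],
  },
};

const declaration = toTypeScriptDeclaration(calculateTotalTool);
console.log(declaration);
```

The output is a doc comment followed by a typed signature, which is more or less the documentation we started with.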

We went:

We circumnavigated the globe to reach our neighbor’s house.

Despite my critique, I still have codemode at the core of workflow orchestration in my SDK. Here’s why:

When you need to orchestrate complicated sequences of operations (conditional logic, loops, error handling, intermediate state management), having the LLM generate code is genuinely powerful:

```typescript
// Step 1: Test basic database connectivity with a simple query
try {
  const connectivityResult = await supportAssistant.dbQuery({ sql: "SELECT 1 as test_value" });
  console.log("Database connectivity test result:", connectivityResult);
} catch (error) {
  console.error("Database connectivity failed:", error);
  return { error: "Database connectivity failed", details: error.message };
}

// Step 2: List all tables to understand database structure
try {
  const tablesResult = await supportAssistant.dbListTables({});
  console.log("Available tables:", tablesResult);
} catch (error) {
  console.error("Failed to list tables:", error);
  return { error: "Failed to list tables", details: error.message };
}

// Step 3: Examine the sessions table structure
try {
  const tableInfoResult = await supportAssistant.dbGetTableInfo({ table: "sessions" });
  console.log("Sessions table structure:", tableInfoResult);
} catch (error) {
  console.error("Failed to get sessions table info:", error);
  return { error: "Failed to get sessions table info", details: error.message };
}

// Step 4: Test a simple count query on sessions table
try {
  const countResult = await supportAssistant.dbCount({ table: "sessions" });
  console.log("Sessions count result:", countResult);
} catch (error) {
  console.error("Failed to count sessions:", error);
  return { error: "Failed to count sessions", details: error.message };
}

return {
  status: "Phase 1 diagnosis completed successfully",
  message: "All basic database operations are working. Ready for Phase 2."
};
```

This kind of complex orchestration is where codemode shines. You can’t easily express this with single tool calls.

For multi-step operations, having the LLM generate a single code block is more token-efficient than round-tripping for every tool call.
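A back-of-the-envelope model shows where the savings come from. With per-call tool use, the model re-reads a growing context on every round trip; with a generated script, it reads the prompt once to write the code and once more to read the result. The numbers and the `CostModel` fields below are illustrative assumptions, not measurements, and the sketch assumes the script returns a single result about the size of one tool result.

```typescript
// All values are assumed for illustration.
interface CostModel {
  basePromptTokens: number; // system prompt + tool definitions + user request
  toolCallTokens: number;   // tokens the model emits per tool call
  toolResultTokens: number; // tokens each tool result adds to the context
}

// Per-call tool use: one round trip per step, each re-reading the context so far.
function perCallInputTokens(steps: number, m: CostModel): number {
  let total = 0;
  let context = m.basePromptTokens;
  for (let i = 0; i < steps; i++) {
    total += context;                                 // model reads the whole context again
    context += m.toolCallTokens + m.toolResultTokens; // the call and its result are appended
  }
  return total + context; // final round trip to produce the answer
}

// Code mode: one round trip to write the script, one to read its single result.
function codeModeInputTokens(steps: number, m: CostModel): number {
  // Assume the script is about as long as the tool calls it replaces.
  const scriptTokens = steps * m.toolCallTokens;
  return m.basePromptTokens + (m.basePromptTokens + scriptTokens + m.toolResultTokens);
}

const assumed: CostModel = { basePromptTokens: 2000, toolCallTokens: 50, toolResultTokens: 200 };

console.log(perCallInputTokens(4, assumed));  // 12500
console.log(codeModeInputTokens(4, assumed)); // 4400
```

Under these assumptions the gap widens with every extra step, because the per-call path grows quadratically with the number of steps while the script path grows linearly.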

As an engineer, I’m not very happy about the design of the solution. We’ve added layers upon layers to solve a communication problem that documentation solved ages ago.

But here’s the uncomfortable truth: sometimes engineering is about navigating the constraints of the present, not designing for an ideal world.

MCP exists because:

The codemode layer exists because:

Each layer solves a real problem. The question is whether we could have designed a simpler solution from the start.

Here’s what I think could have worked better:

We already have rich, well-established formats for describing APIs and tools:

`--help`

These aren’t just specifications. They’re documentation written for humans. LLMs understand human-readable text far better than they understand a new JSON schema they’ve never seen.

The standard could have been:

Direct semantic inference from existing API specs and documentation.

No new schema. No transpilation. Just feed the LLM the docs that already exist.
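As a rough illustration of what "feed it the docs" could look like, the sketch below pulls doc comments and signatures straight out of source text and drops them into a prompt. The function names and the prompt wording are hypothetical, and the regex is deliberately naive: it assumes plain `function` declarations with no nested parentheses in the parameter list.

```typescript
// Matches a /** ... */ comment immediately followed by a function signature.
// The negative lookahead keeps one match from spanning two separate comments.
const DOC_AND_SIGNATURE =
  /\/\*\*(?:(?!\*\/)[\s\S])*\*\/\s*(?:export\s+)?(?:async\s+)?function\s+\w+\([^)]*\)(?::\s*[^{]+)?/g;

// Return each documented signature, without the function body.
function extractDocs(source: string): string[] {
  return (source.match(DOC_AND_SIGNATURE) ?? []).map((block) => block.trim());
}

// Hypothetical prompt wording; the point is that the docs go in unchanged.
function buildToolPrompt(source: string): string {
  return ["You can call the following functions:", ...extractDocs(source)].join("\n\n");
}

const source = `
/**
 * Calculates the total price including tax.
 * @param basePrice - The base price before tax
 * @param taxRate - Tax rate as a decimal (e.g., 0.1 for 10%)
 * @returns The total price including tax
 */
function calculateTotal(basePrice: number, taxRate: number): number {
  return basePrice * (1 + taxRate);
}
`;

const prompt = buildToolPrompt(source);
console.log(prompt);
```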

Instead of a monolithic protocol, imagine:

The real challenge isn’t format. It’s discovery. When an LLM has access to thousands of potential tools, how does it find the right ones?

This is where technologies like GraphRAG could shine:

```
Query: "I need to book a flight and send a confirmation email"

GraphRAG retrieves:
├── flights.booking.reserve (Travel Domain)
├── flights.booking.confirm (Travel Domain)
├── email.transactional.send (Communication Domain)
└── templates.confirmation.generate (Content Domain)
```

The LLM doesn’t need to know about every available tool, only the ones relevant to the current task, retrieved semantically.
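The retrieval step can be sketched without any graph machinery. The example below is not GraphRAG: it swaps in plain keyword overlap as a stand-in, just to show the shape of "query in, short list of tools out". The registry descriptions, the two extra distractor tools, and the stop-word list are all made up for this sketch.

```typescript
interface ToolEntry {
  id: string;
  domain: string;
  description: string; // hypothetical descriptions written for this sketch
}

const registry: ToolEntry[] = [
  { id: "flights.booking.reserve", domain: "Travel", description: "Reserve a seat and book a flight" },
  { id: "flights.booking.confirm", domain: "Travel", description: "Confirm a flight booking" },
  { id: "email.transactional.send", domain: "Communication", description: "Send a transactional email" },
  { id: "templates.confirmation.generate", domain: "Content", description: "Generate a confirmation message from a template" },
  { id: "payments.refund.issue", domain: "Billing", description: "Issue a refund to a customer" },
  { id: "db.sessions.count", domain: "Data", description: "Count rows in the sessions table" },
];

const STOP_WORDS = new Set(["a", "an", "and", "the", "to", "i", "need", "in", "from"]);

// Lowercase, split on anything that is not a letter, and drop stop words.
function tokenize(text: string): string[] {
  return text
    .toLowerCase()
    .split(/[^a-z]+/)
    .filter((word) => word.length > 0 && !STOP_WORDS.has(word));
}

// Score each tool by how many distinct query terms appear in its id or description,
// then return the best matches. Tools with no overlap are dropped entirely.
function retrieveTools(query: string, tools: ToolEntry[], limit = 4): ToolEntry[] {
  const queryTerms = Array.from(new Set(tokenize(query)));
  return tools
    .map((tool) => {
      const toolTerms = new Set(tokenize(`${tool.id} ${tool.description}`));
      return { tool, score: queryTerms.filter((term) => toolTerms.has(term)).length };
    })
    .filter((scored) => scored.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((scored) => scored.tool);
}

const relevant = retrieveTools("I need to book a flight and send a confirmation email", registry);
console.log(relevant.map((tool) => `${tool.id} (${tool.domain} Domain)`));
```

Only the retrieved entries would go into the LLM's context. A real system would replace the overlap score with embeddings or graph traversal, but the contract stays the same.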

MCP centralized the problem: "Here's one protocol, everyone conform to it."

The alternative would have been to decentralize: "Here's how to expose your existing docs in a way LLMs can consume, and here's how to make your tools discoverable."

But that ship has sailed. MCP is the de facto standard now, and we're building infrastructure around it. Perhaps what emerges next will be the semantic layer on top: a way to bridge the gap between what we have and what could have been.

Six months of building an MCP SDK taught me that the industry often converges on solutions that work despite their complexity, not because of their elegance.

We wanted to tell LLMs how to use our functions. We had the answer all along: good documentation. Instead, we built layers of abstraction, transpilers, sandboxes, and proxy systems to convert code into JSON and back into code again.

MCP and codemode solve real problems. The standardization has value. The security guarantees matter. But as engineers, we should keep asking ourselves: are we solving the problem, or are we building impressive machinery around a simpler solution?

The next time someone proposes a new protocol to let AI use your APIs, start with the simplest question:

“Have you tried just showing it the docs?”