Best Mobile App Framework 2025: Find the One That Speeds Your Launch

Discover 2025 benchmarks comparing Flutter, React Native, and Native: See which framework speeds your launch by up to 4 months while cutting long-term costs by 50%.

Aug 31, 2025

written by Aman Kumar Nirala and Utkarsh Khanna

In this blog, we benchmark Flutter, React Native, and fully Native development (Android/iOS) using 2025-ready tooling, real-world test cases, and a scientific methodology. Our goal is not just to measure performance, responsiveness, and user experience, but also to recommend the right tool for the right context.

To make this comparison meaningful, we developed the same Flashcard Generator App using all three technologies, ensuring a consistent UI, behavior, and user experience across platforms. This approach allows us to provide practical, data-driven insights and guide you on which framework to choose and when, based on your needs and constraints.

In 2025, users demand zero lag, instant loads, and battery-friendly behavior, no matter the device. Whether you’re building the next big consumer app or optimizing a mission-critical enterprise tool:

A slow app is a failed product.

The choice of development framework directly influences app performance and long-term scalability. A beautifully designed UI that stutters or drains power won’t survive in production, no matter how good the codebase looks.

That’s why we’re putting these three frameworks through real benchmarks, not just theoretical comparisons.

Flutter takes a fundamentally different approach from traditional native rendering. Instead of relying on platform-specific UI components, Flutter renders its UI entirely through its own graphics engine. With the introduction of Impeller, Flutter’s new default renderer, it draws every frame directly to the screen using the GPU via Metal on iOS or Vulkan/OpenGL on Android.

Unlike traditional frameworks that rely heavily on the main thread for UI updates, Flutter’s architecture separates concerns across multiple threads. Impeller enhances this model by providing pre-compiled shader pipelines and a more deterministic rendering path. This helps eliminate runtime shader jank and ensures consistent, high frame-rate animations.

The result is smooth and reliable performance across platforms, especially for apps with custom UIs, rich animations, or pixel-level precision, without depending on the underlying platform’s widget set.

In theory: Flutter should be blazing fast. But does it hold up under real-world stress? Can it maintain performance without dropping frames or consuming excess memory?

React Native renders UI by bridging React components written in JavaScript or TypeScript to native platform views. Instead of drawing pixels directly like Flutter, React Native delegates rendering to the native UI toolkits (UIKit on iOS and ViewGroups on Android), ensuring platform fidelity and seamless integration with native APIs.

Modern React Native, powered by the Fabric renderer, introduces a streamlined, synchronous rendering path that leverages the JavaScript Interface (JSI). This architecture enables faster and more predictable UI updates by directly connecting JavaScript and native C++ objects without serialization. Layout calculation is handled by Yoga, a cross-platform Flexbox layout engine optimized for performance and consistency.

React Native components are represented internally as a tree of lightweight Shadow Nodes, which are reconciled and committed in batches to the native view hierarchy. This design enables precise control over rendering, layout, and updates.

Additionally, TurboModules allow native modules to be loaded on demand, reducing memory footprint and improving startup time. Combined with Concurrent React, Fabric enables advanced capabilities like prioritized rendering, smooth transitions, and fine-grained UI scheduling.

The result is a modern and efficient rendering engine that brings the flexibility of React together with high-performance native rendering, offering a robust foundation for scalable, interactive mobile applications.

But is React Native fast enough to compete with Flutter and Native on today’s devices?

Native development leverages the platform’s built-in rendering engine and system-optimized graphics stack to deliver high-performance UIs tailored for Android and iOS. On Android, Jetpack Compose renders using the Android UI toolkit, powered by RenderThread, Choreographer, and the Skia graphics engine. On iOS, SwiftUI (or UIKit) renders directly through Core Animation and Metal, the same stack Apple uses for all system UIs.

Both Jetpack Compose and SwiftUI adopt a declarative UI paradigm, where UIs are composed of reactive state-driven functions. Under the hood, they convert composable functions into efficient view trees, track state changes through recomposition or diffing, and submit batched updates directly to the native rendering pipeline.

Jetpack Compose handles layout and rendering through a series of coordinated threads:

SwiftUI operates with similar elegance, backed by a system-level rendering engine:

This architecture ensures tight integration with the OS, smooth animations, and precise control over memory, gestures, accessibility, and GPU usage. It’s especially effective for building high-performance, responsive UIs that align seamlessly with platform guidelines and system behavior.

The result is a highly optimized native render path, purpose-built for mobile performance, with direct access to every pixel, sensor, and system capability the platform has to offer.

But how much better is native in terms of real-world performance?

To make this comparison meaningful, we did not rely on opinions or anecdotes. We created a controlled benchmark methodology where each framework was tested with identical UI, behavior, workload, and sampling, ensuring consistent user flows on both iOS and Android.

All measurements were taken on real devices, with multiple iterations under repeatable conditions. The benchmarks tracked startup time, frame rendering stability, memory behavior, I/O operations, and dropped frames. This approach ensured that we were not only capturing raw speed but also the runtime behavior that defines real-world user experience.

By grounding the study in a transparent and repeatable methodology, our goal is to move past assumptions and provide practical, data-driven insights into when to choose Flutter, React Native, or Native.

Our study combined two perspectives:

This study focuses on runtime behavior rather than feature parity.

All benchmark harnesses and the Flashcard demo app are available in our GitHub repository:

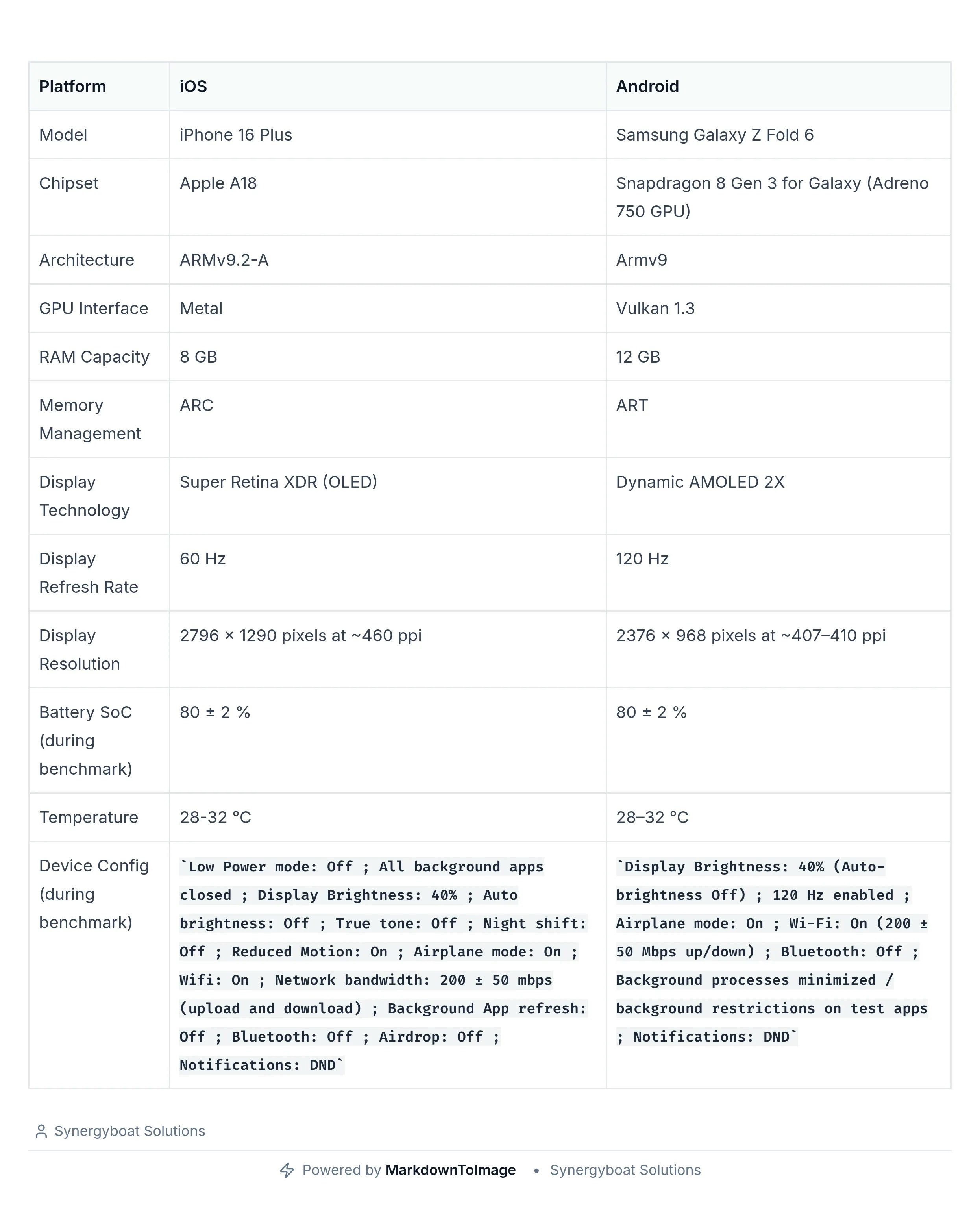

For stable results use the device configs listed below.

Disclaimer on Benchmarking Limitations:

Every effort was made to control environmental factors during testing, such as battery level, background processes, thermal state, and device configuration. Even so, mobile application performance benchmarking can never be entirely free from exogenous variables. Factors like OS-level scheduling, hardware-specific optimizations, and runtime variability across platforms inevitably introduce some degree of noise. Our goal is not to claim absolute performance truths, but to present repeatable, well-controlled comparisons of relative performance trends.

To explore developer experience, we built the same Flashcard Generator AI app in Flutter, React Native, and Native (Swift for iOS and Kotlin for Android). The app connects with the OpenAI API to generate flashcard decks, organizes them in a clean UI, and follows a clean code architecture to keep logic, UI, and data layers separate.

This gave us a real-world way to test:

Working across all three frameworks highlighted important trade-offs. Here’s what our team learned from the experience, and how each platform stacked up in real development:

This exercise surfaced what it feels like to build and ship in each ecosystem. But developer experience is only one side of the story.

To understand how these frameworks perform under pressure, we designed a dedicated List Rendering Benchmark. This allowed us to test runtime behavior under identical conditions, removing subjective impressions and focusing on measurable outcomes.

To measure runtime performance objectively, we created a dedicated List Rendering Benchmark Screen in each framework: Flutter, React Native, Swift (iOS), and Kotlin (Android).

This benchmark was carefully designed to be consistent and reproducible across all implementations:

By isolating list rendering, we removed higher-level app variability and focused solely on rendering efficiency, giving us a clear picture of how each framework behaves under the same workload.

All benchmarks were executed on physical devices under controlled conditions to ensure consistency:

To maintain fairness across runs, the environment was carefully controlled:

To keep things fair, we tested Android on the Galaxy Z Fold 6 with its silky 120 Hz Dynamic AMOLED 2X display: the best hardware we could get our hands on, so the device itself wasn’t the bottleneck. iOS, on the other hand, ran on the iPhone 16 Plus, which still tops out at 60 Hz.

For all the number crunching, we normalized performance against each panel’s refresh target, so the comparison stays clean.

That said,

Dear Apple, maybe it’s time every $1,000+ iPhone gets 120 Hz. ProMotion shouldn’t require a “Pro” suffix; smoothness is a human right, not a product tier.

Our benchmark captured both runtime performance and build/runtime resource characteristics.

Runtime Metrics

Memory Metrics

Build & Binary Metrics (collected manually, outside runtime execution)

To ensure consistency across frameworks, the following formulas were applied when calculating benchmark metrics:

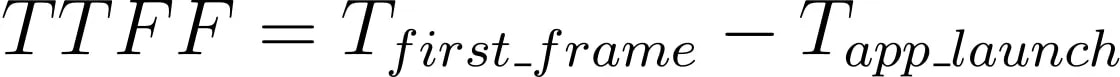

Startup Time (TTFF)

Time difference between app launch and first rendered frame.

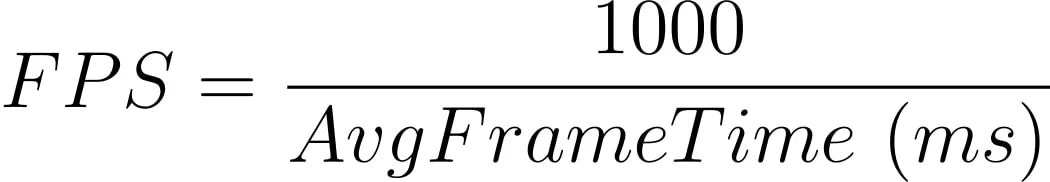

Frames Per Second (FPS)

Converts average frame time into effective frame rate.

95th Percentile Frame Time (P95): Extracted as the frame time below which 95 percent of samples fall, to capture tail latency.
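As a rough illustration of the two definitions above, the following Python sketch converts a mean frame time into an effective frame rate and extracts a 95th percentile. The nearest-rank percentile method and the sample data are assumptions made for illustration; the benchmark harness may use a different interpolation.

```python
import math

def fps_from_avg_frame_time(avg_frame_time_ms: float) -> float:
    """Effective frame rate implied by a mean frame time in milliseconds."""
    return 1000.0 / avg_frame_time_ms

def percentile_nearest_rank(samples_ms: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100.0)  # 1-based rank
    return ordered[max(rank, 1) - 1]

# Hypothetical run: 18 smooth frames and 2 slow frames.
frame_times_ms = [8.0] * 18 + [20.0] * 2
avg_ms = sum(frame_times_ms) / len(frame_times_ms)

print(f"avg frame time: {avg_ms:.2f} ms")
print(f"effective FPS:  {fps_from_avg_frame_time(avg_ms):.1f}")
print(f"p95 frame time: {percentile_nearest_rank(frame_times_ms, 95):.1f} ms")
```

In this made-up run the average stays close to the smooth frames while P95 lands on the slow ones, which is why the two are read together.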

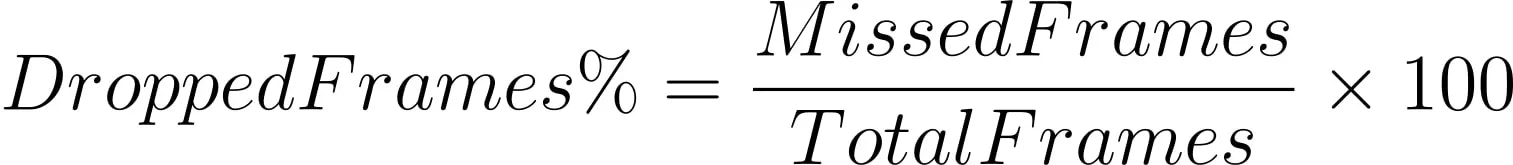

Dropped Frames %

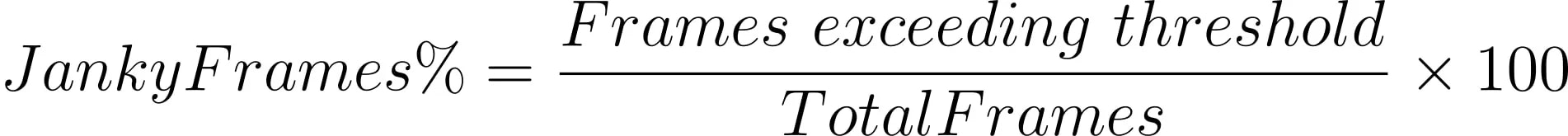

Janky Frames %

Where threshold = 16.67 ms (for 60 Hz) or 8.33 ms (for 120 Hz).
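A minimal Python sketch of the jank calculation, assuming the formula counts every frame whose duration exceeds the per-refresh-rate threshold. The function names are hypothetical and the sample values are invented.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Frame budget for a display: 1000 ms divided by the refresh rate."""
    return 1000.0 / refresh_hz

def janky_frames_percent(frame_times_ms: list[float], refresh_hz: float) -> float:
    """Share of frames (in percent) whose duration exceeds the frame budget."""
    budget = frame_budget_ms(refresh_hz)
    janky = sum(1 for t in frame_times_ms if t > budget)
    return 100.0 * janky / len(frame_times_ms)

# Hypothetical samples: the same frame times judged against two panels.
samples_ms = [8.0, 8.0, 9.0, 8.0]
print(janky_frames_percent(samples_ms, 120))  # one frame exceeds ~8.33 ms
print(janky_frames_percent(samples_ms, 60))   # none exceed ~16.67 ms
```

The same frame times can be clean on a 60 Hz panel and janky on a 120 Hz one, which is why the threshold depends on the display.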

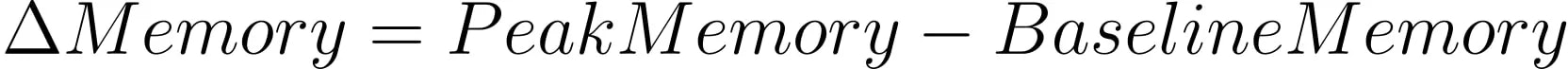

Memory Delta (MB)

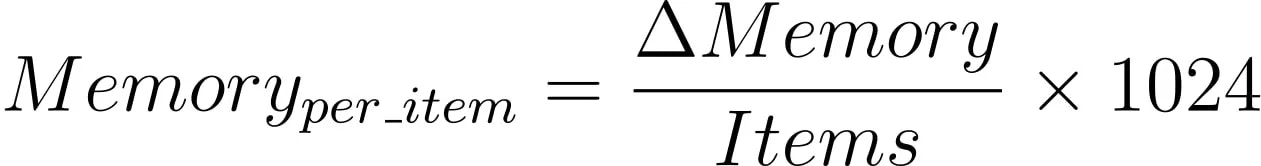

Memory per Item (KB)

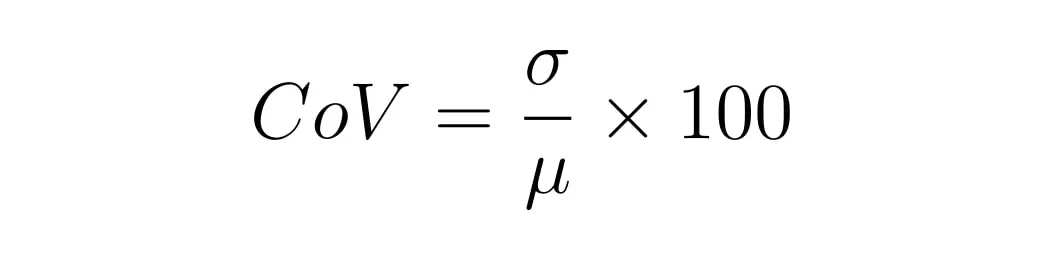
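The two memory metrics can be sketched in Python as follows. This assumes Memory Delta is the end-of-run reading minus the start-of-run reading, and that Memory per Item divides that delta by the number of list items rendered; both are assumptions about the formulas, and the byte values are invented.

```python
BYTES_PER_MB = 1024 * 1024

def memory_delta_mb(start_bytes: int, end_bytes: int) -> float:
    """Growth in process memory over the benchmarked action, in MB."""
    return (end_bytes - start_bytes) / BYTES_PER_MB

def memory_per_item_kb(delta_mb: float, item_count: int) -> float:
    """Average memory growth attributed to each rendered item, in KB."""
    return delta_mb * 1024.0 / item_count

# Hypothetical readings before and after scrolling a 1,000-item list.
delta = memory_delta_mb(100 * BYTES_PER_MB, 112 * BYTES_PER_MB)
print(f"delta: {delta:.1f} MB, per item: {memory_per_item_kb(delta, 1000):.3f} KB")
```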

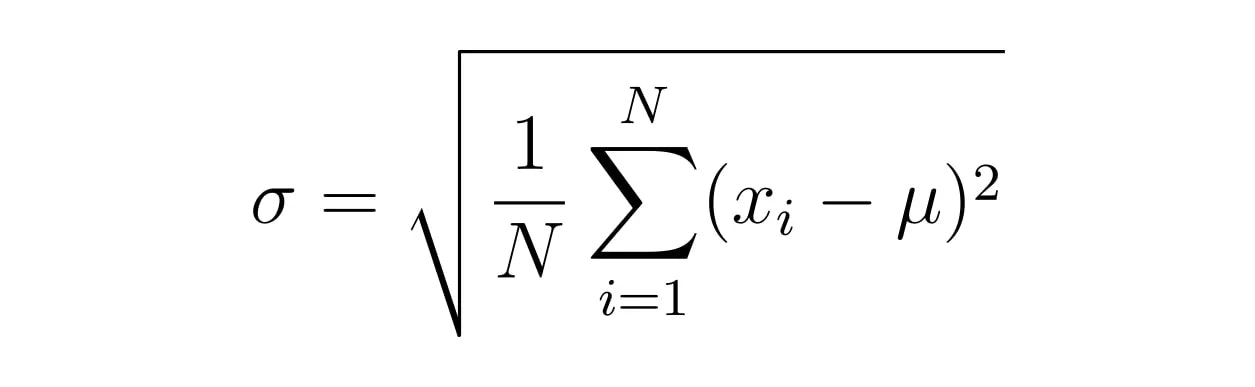

Coefficient of Variation (CoV)

Ratio of standard deviation (σ) to mean frame time (μ), indicating frame stability.
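In Python, the ratio can be sketched as below and expressed in percent. Whether the harness uses the population or the sample standard deviation is not stated, so the population form used here is an assumption.

```python
import statistics

def coefficient_of_variation_percent(frame_times_ms: list[float]) -> float:
    """CoV = sigma / mu, expressed in percent. Lower means steadier frame pacing."""
    mean = statistics.fmean(frame_times_ms)
    sigma = statistics.pstdev(frame_times_ms)  # population std dev (assumption)
    return 100.0 * sigma / mean

# Hypothetical samples: perfectly even pacing vs. alternating fast and slow frames.
print(coefficient_of_variation_percent([10.0, 10.0, 10.0, 10.0]))
print(coefficient_of_variation_percent([8.0, 12.0, 8.0, 12.0]))
```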

iOS Native (Swift + Obj-C)

CADisplayLink, CADisplayLink.preferredFrameRateRange, task_info(..., MACH_TASK_BASIC_INFO / TASK_VM_INFO)

Android Native (Kotlin/Compose)

android.view.Choreographer, Debug.getMemoryInfo(), ActivityManager.getMemoryInfo()

Flutter (Dart)

SchedulerBinding.instance.addTimingsCallback, ProcessInfo.currentRss, dart:io

React Native (JS + NativeModules)

NativeModules.FrameProfiler, NativeModules.MemoryProfiler

With the setup locked, we move from lab notes to lap times. All charts below clearly distinguish each display’s refresh target (16.7 ms @60 Hz, 8.3 ms @120 Hz) to reflect true, user-visible smoothness.

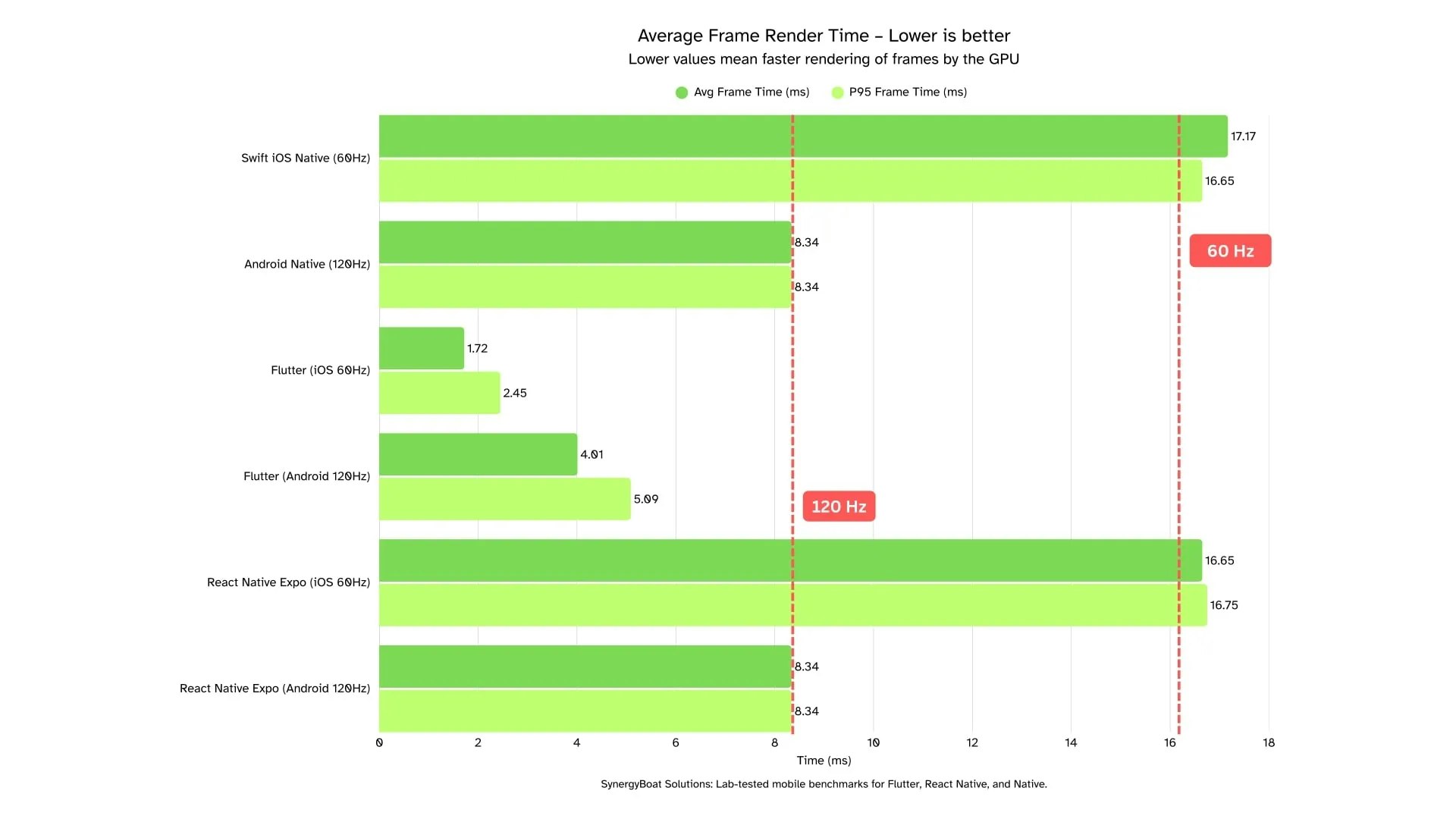

Average Frame Render Time is the mean time your app takes to produce each frame during the test run. Think of it as the “cruising speed” of your UI. Lower is better, and it directly maps to perceived smoothness. It’s a solid health check for overall rendering efficiency and a good way to spot broad regressions (layout work, heavy decoding, overdraw).

However, averages can be charmingly deceitful: they hide stutters and hitching that users actually feel. Use it alongside tail metrics (p95), DroppedFrames%, and Jank% to see the whole picture, and always read it against the frame budget (≈16.7 ms at 60 Hz, ≈8.3 ms at 120 Hz).

Practical rule of thumb: Keep your average comfortably below the budget (e.g., ≤14 ms at 60 Hz, ≤7 ms at 120 Hz) to preserve headroom for GC, I/O, and animation spikes, because smooth isn’t the average, it’s the experience.

V-Sync Note (Render Time & FPS Capping): Mobile UIs are synchronized to the display’s v-sync, so frames can only appear at fixed intervals, i.e. ~16.7 ms at 60 Hz and ~8.3 ms at 120 Hz. If a frame finishes early, it simply waits for the next v-sync. Users won’t see “extra” frames. In Flutter, FrameTiming exposes engine-side build and raster durations that aren’t v-sync-capped, so you can see true rendering effort. In iOS/Android native and React Native (with CADisplayLink/Choreographer), our timestamps are v-sync-aligned, so an on-time frame may still report near the budget even if it completed earlier.
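The v-sync effect can be approximated with a simplified Python model in which a frame becomes visible at the first v-sync boundary at or after its render work finishes. This is an idealized sketch that ignores pipelining and buffering, not a description of how any of the profilers work internally.

```python
import math

def presented_interval_ms(render_ms: float, refresh_hz: float) -> float:
    """Interval at which a frame becomes visible when presentation snaps to v-sync slots."""
    period = 1000.0 / refresh_hz
    slots = max(1, math.ceil(render_ms / period))  # a frame always occupies at least one slot
    return slots * period

# Hypothetical render durations on a 60 Hz panel.
for render_ms in (5.0, 14.0, 20.0):
    print(f"{render_ms:5.1f} ms of work -> shown after {presented_interval_ms(render_ms, 60):.1f} ms")
```

Under this model, 5 ms and 14 ms of work both appear after one ~16.7 ms slot, while 20 ms of work slips to the second slot. That is why v-sync-aligned timestamps cluster near the budget and only engine-side timings reveal the spare headroom.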

Practical reading: Judge Average Frame Render Time against the frame budget, and pay special attention to bars that cross the red line. Those are missed v-sync deadlines (jank). Sub-budget bars indicate headroom and stability, not higher visible FPS (no medals for “200 FPS on a 60 Hz screen”).

How to read this: Averages are v-sync–aware on native/RN (they cluster near the frame period), while Flutter’s engine timings reflect actual render work, so sub-budget numbers indicate headroom, not higher visible FPS.

Insights:

iOS (60 Hz, budget ≈ 16.7 ms)

Android (120 Hz, budget ≈ 8.3 ms)

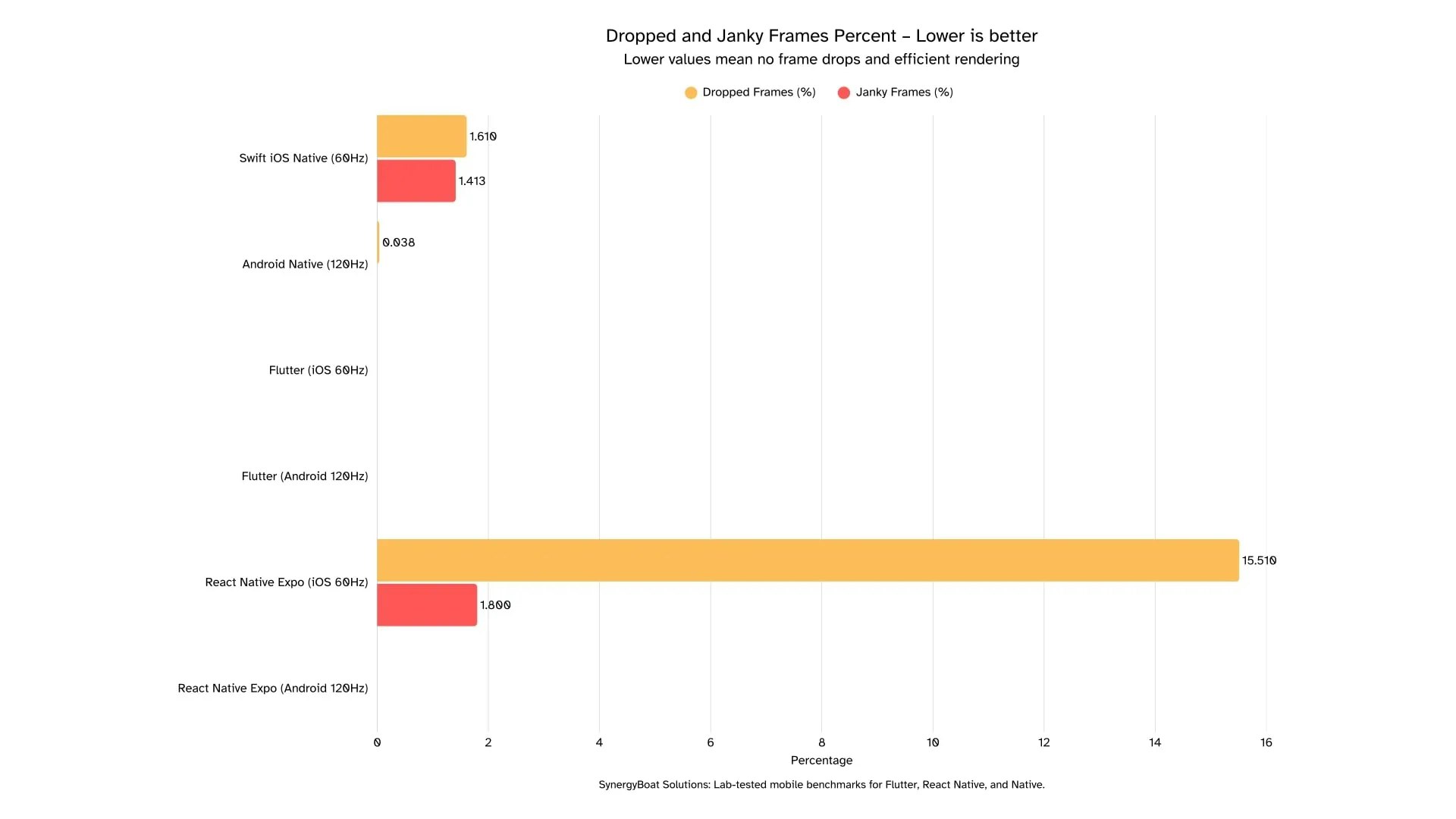

Dropped Frames Percent is the share of frames that miss their v-sync deadline entirely and are not presented on time. Janky Frames Percent is the share of frames that exceed the frame budget but still show up on the next v-sync. Lower is better for both. These metrics reveal the stutters users actually feel. Use them to catch GC spikes, image decode stalls, layout bursts, or bridge contention that a good average can hide.

However, single percentages can be charmingly deceitful. Read them alongside p95, Average Frame Render Time, and your frame budget (≈16.7 ms at 60 Hz, ≈8.3 ms at 120 Hz).

Practical rule of thumb: Keep Jank% under 1 to 2 percent and DroppedFrames% well under 1 percent for sustained scrolls. Anything above that is noticeable in production.

How to read this: DroppedFrames% captures missed presentations. Jank% captures frames that exceeded the budget. Zeroes mean the pipeline met its deadlines. Non-zero values point to contention on the UI, JS, or decode paths. Interpret these alongside p95 and Average Frame Render Time to separate steady-state smoothness from stress behavior.

Insights:

iOS (60 Hz, budget ≈ 16.7 ms)

Android (120 Hz, budget ≈ 8.3 ms)

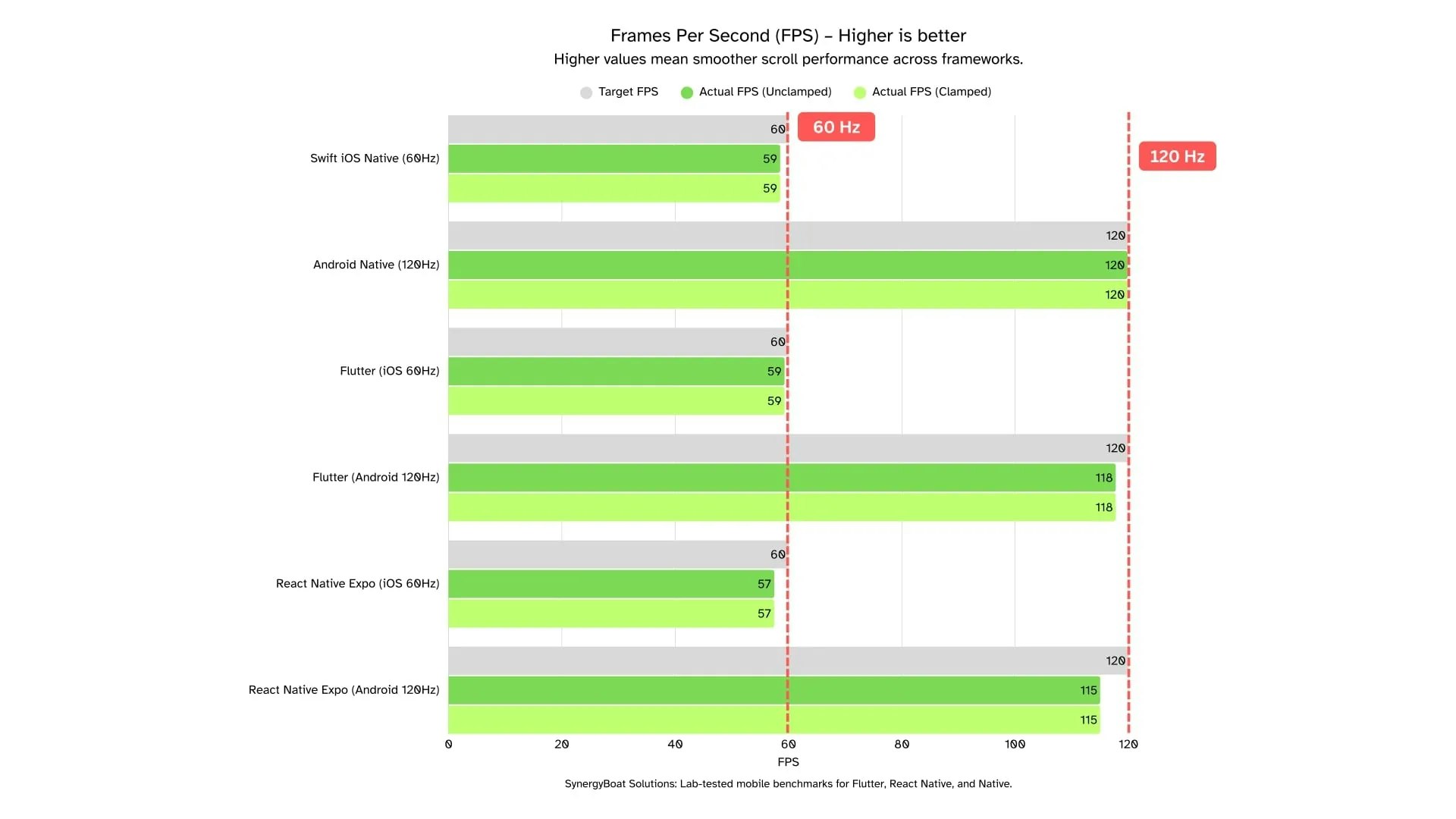

FPS is the number of frames the app actually presents per second during the test run. It is the presentation rate users see. Higher is better, up to the device’s refresh ceiling. It is a solid health check for steady-state smoothness and a quick way to spot underutilization of the frame budget.

However, it hides stutters and hitching that appear as tail events. Read FPS together with p95, Average Frame Render Time, DroppedFrames%, and Jank%, and always compare against the panel ceiling (60 or 120 Hz).

Practical rule of thumb: Aim for ≥ 98% of the panel refresh under sustained scroll when tails are also clean.

V-Sync Note (Clamped vs Unclamped): Mobile displays present frames in v-sync slots only. Because our runs stayed within the budget most of the time, Unclamped and Clamped FPS are identical here. Sub-budget frame times do not raise visible FPS beyond the panel limit. Use FPS as a presentation sanity check, and use frame time and p95 to understand real headroom.
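One way to picture the clamped and unclamped figures, together with the 98% rule of thumb, is the Python sketch below. It assumes Clamped FPS is simply the unclamped value capped at the panel refresh rate; the exact definition used in the charts is not spelled out, so treat this as an illustration.

```python
def unclamped_fps(avg_frame_time_ms: float) -> float:
    """Frame rate implied by frame time alone, ignoring the display limit."""
    return 1000.0 / avg_frame_time_ms

def clamped_fps(avg_frame_time_ms: float, refresh_hz: float) -> float:
    """Frame rate capped at the panel refresh rate (assumed definition)."""
    return min(unclamped_fps(avg_frame_time_ms), refresh_hz)

def meets_refresh_target(fps: float, refresh_hz: float, min_ratio: float = 0.98) -> bool:
    """Rule-of-thumb check: FPS should reach at least 98% of the panel refresh."""
    return fps >= min_ratio * refresh_hz

# Hypothetical: 5 ms of work per frame on a 60 Hz panel.
print(unclamped_fps(5.0), clamped_fps(5.0, 60))
print(meets_refresh_target(118.0, 120), meets_refresh_target(58.0, 60))
```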

Insights:

iOS (60 Hz, ceiling 60)

Android (120 Hz, ceiling 120)

How to read this: FPS is the visible outcome of meeting v-sync deadlines. Equality of Unclamped and Clamped confirms v-sync capping. Use FPS to confirm presentation quality, then rely on p95 and Average Frame Render Time to diagnose headroom and the real causes of any drops.

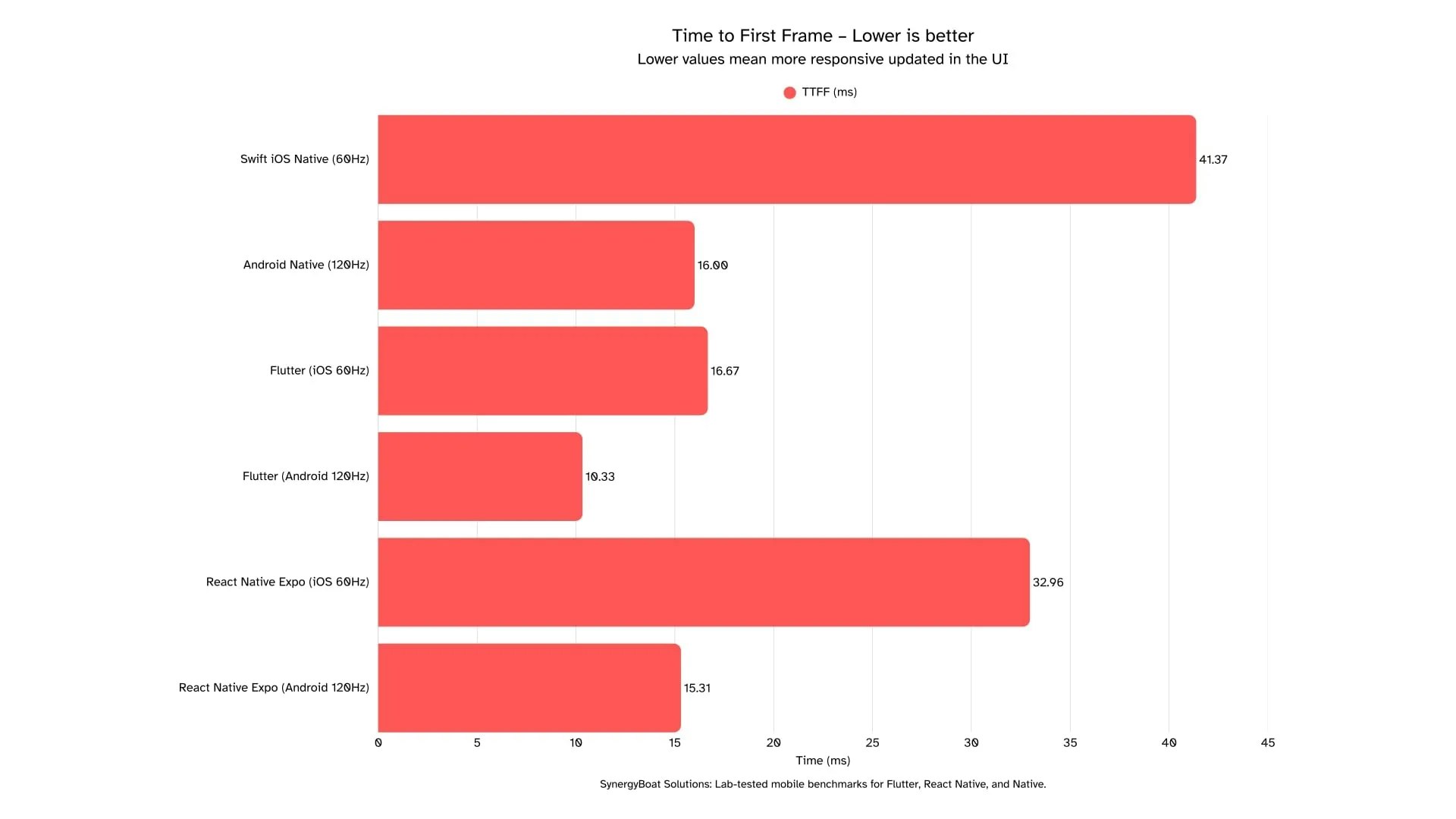

Time to First Frame (TTFF) is the time from launch to the first presented frame. Lower is better. It sets the very first impression of snappiness and is a solid health check for startup work like runtime initialization, dependency injection, and initial layout.

Look at StdDev to understand stability across runs and read TTFF alongside Average Frame Render Time, p95, DroppedFrames%, and Jank%.

Practical rule of thumb: Aim for TTFF that feels instant to the eye. Sub-50 ms reads as immediate. Keep StdDev tight so users do not occasionally see a slower first paint.
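A small Python sketch of how TTFF and its spread across runs might be summarized. The timestamps are invented, and the use of the sample standard deviation is an assumption.

```python
import statistics

def ttff_ms(launch_ts_ms: float, first_frame_ts_ms: float) -> float:
    """Time to first frame: first presented frame timestamp minus launch timestamp."""
    return first_frame_ts_ms - launch_ts_ms

def summarize_runs(ttff_runs_ms: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of TTFF across repeated launches."""
    return statistics.fmean(ttff_runs_ms), statistics.stdev(ttff_runs_ms)

# Hypothetical (launch, first frame) timestamp pairs from three launches.
runs = [ttff_ms(0.0, 40.0), ttff_ms(100.0, 142.0), ttff_ms(200.0, 244.0)]
mean_ms, stddev_ms = summarize_runs(runs)
print(f"TTFF mean: {mean_ms:.1f} ms, StdDev: {stddev_ms:.1f} ms")
```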

Launch Semantics Note (Cold vs Warm): TTFF depends on what is initialized before the first frame. Warm starts, cached assets, and prewarmed engines reduce TTFF. Cold starts, heavy module initialization, and synchronous I/O increase it.

Insights:

iOS (60 Hz)

Android (120 Hz)

How to read this: TTFF is your first impression metric. Use StdDev to judge if that impression is consistent. If TTFF looks great but StdDev is high, users will sometimes feel a slow start even if the average looks fine.

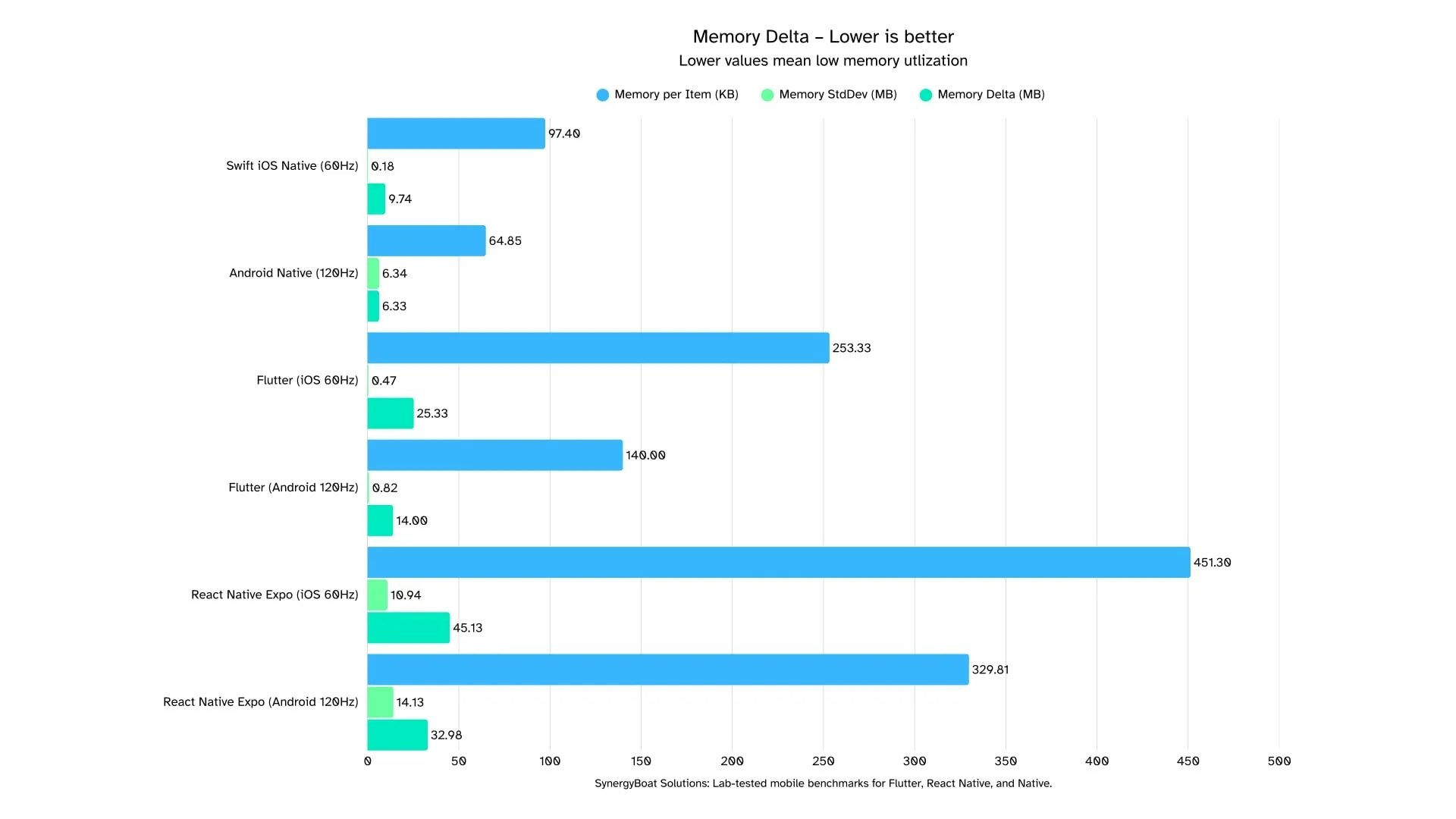

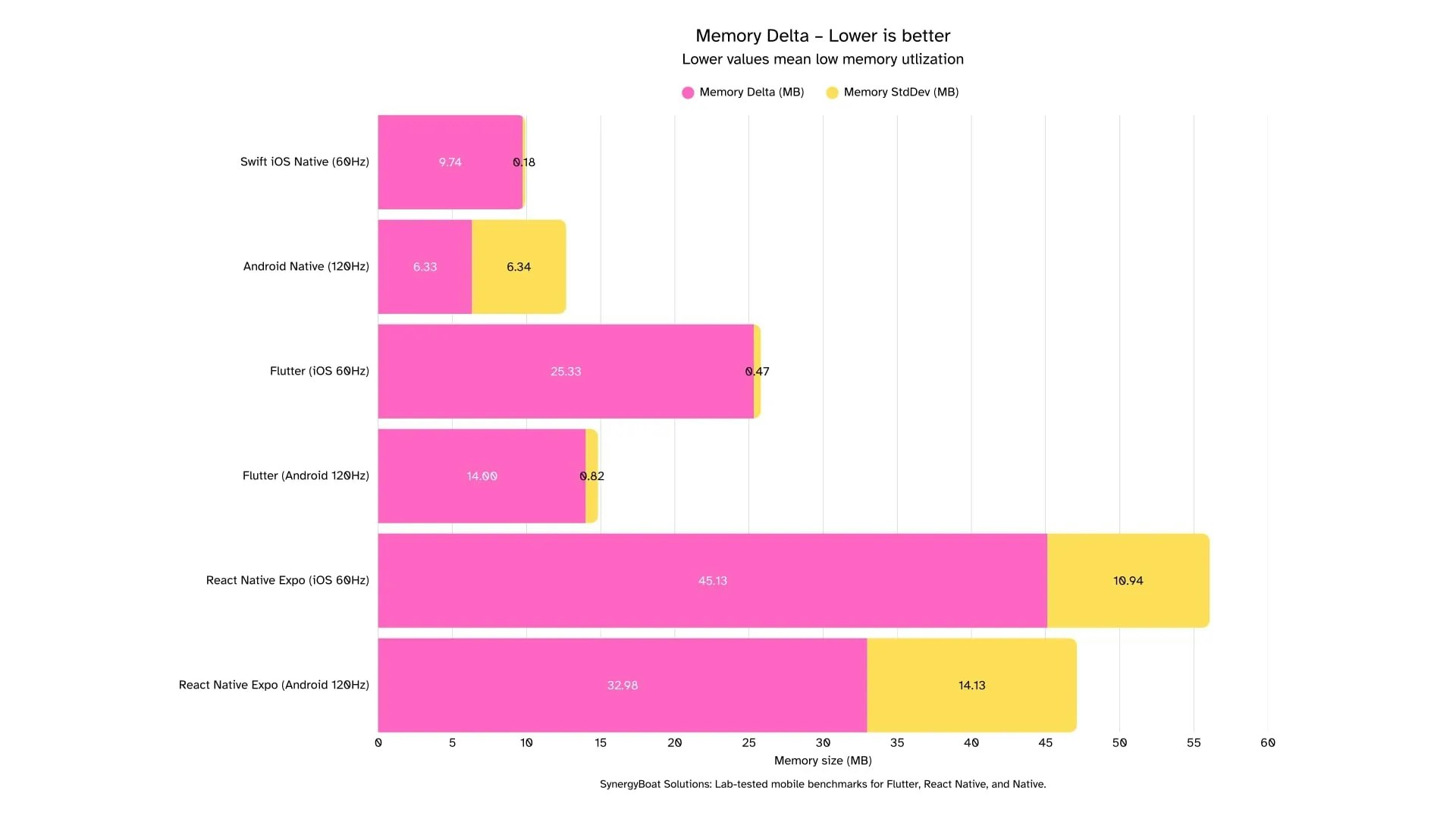

Memory Delta is the increase in process memory during the benchmarked action. Lower is better. It reflects allocations created by rendering, data structures, images, and caches that come alive while the list scrolls.

However, single deltas can be misleading. Always read StdDev to judge stability across runs, and compare within each OS because iOS and Android report memory differently.

Practical rule of thumb: Keep ΔMemory in the low teens of MB for simple scrolls and keep StdDev small so growth is predictable.

Memory Metric Note (RSS vs PSS): iOS reports RSS and Android reports PSS. These are not directly comparable. Use within-platform deltas for fairness and call out the metric in tables so no one mixes apples with Androids.

Insights:

iOS (60 Hz)

Android (120 Hz)

How to read this: ΔMemory shows how heavy a screen becomes as it works. StdDev separates steady growth from spiky behavior. Interpret results alongside Average Frame Render Time, p95, DroppedFrames%, and Jank% to see whether memory pressure aligns with visual hitches.

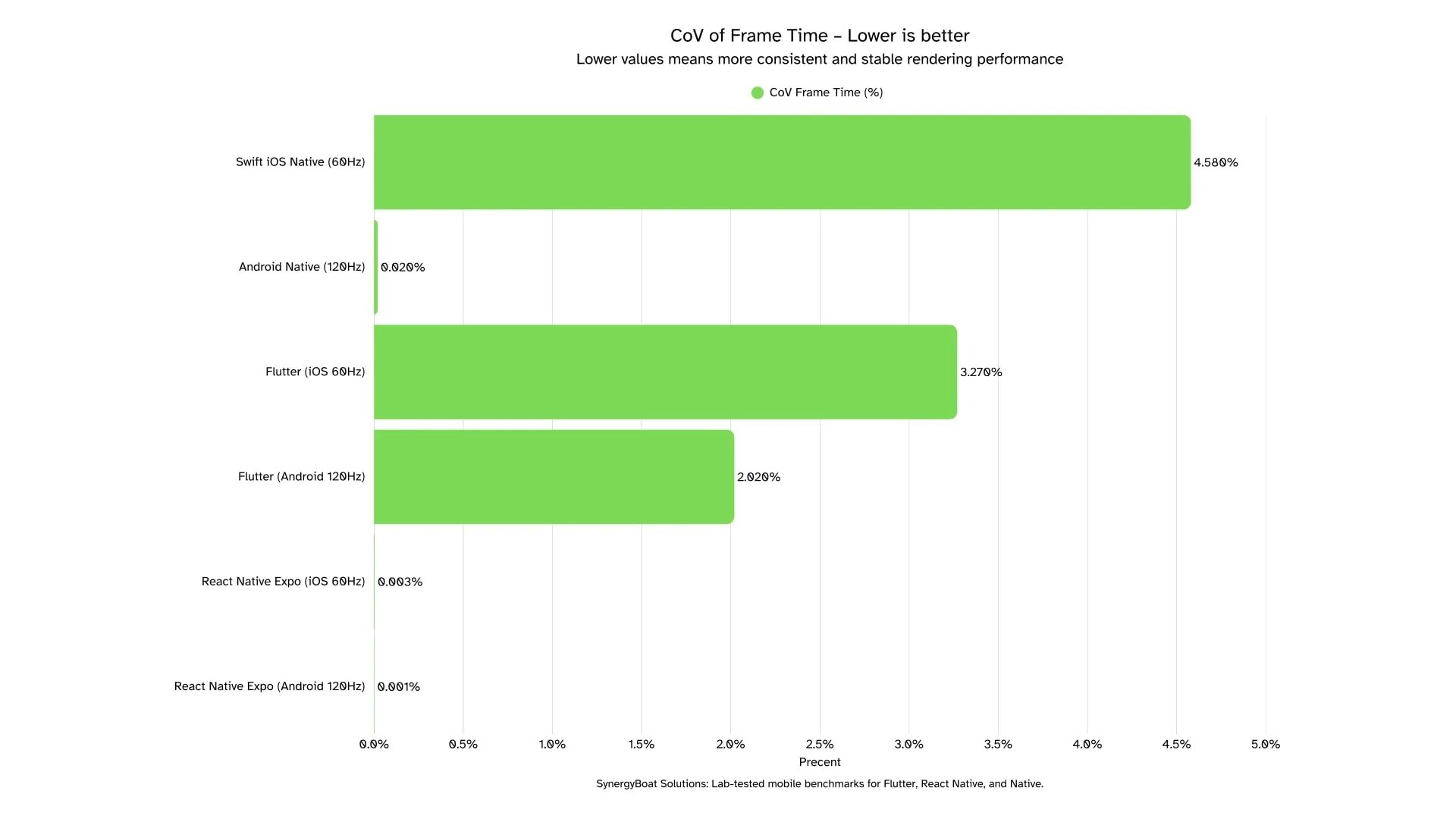

Coefficient of Variation (CoV) of Frame Time is the ratio of the standard deviation to the mean frame time, expressed in percent. Lower is better. It captures frame-to-frame stability, which maps to perceived smoothness. It is a solid health check for pacing consistency and a good way to spot micro-hitches even when averages look fine.

Read it alongside p95, Average Frame Render Time, DroppedFrames%, and Jank%, and always compare against the frame budget (≈16.7 ms at 60 Hz, ≈8.3 ms at 120 Hz).

Practical rule of thumb: Keep CoV in the low single digits for sustained scrolls. If CoV rises above 5 percent on 60 Hz or 3 percent on 120 Hz, expect visible inconsistency.

Variability Note (Quantization and sample size): V-sync quantization can compress dispersion when frames cluster near the budget, and small sample sizes can make CoV look artificially tiny. Practical reading: treat CoV as a stability lens, not a pass score. Confirm with p95 and JankFrames% and DroppedFrames%.

Insights:

iOS (60 Hz)

Android (120 Hz)

How to read this: CoV tells you how even the frame cadence is. It does not tell you whether frames meet the budget. A pipeline can have tiny CoV and still miss v-sync consistently. Interpret CoV together with p95, Average Frame Render Time, DroppedFrames%, and Jank%.

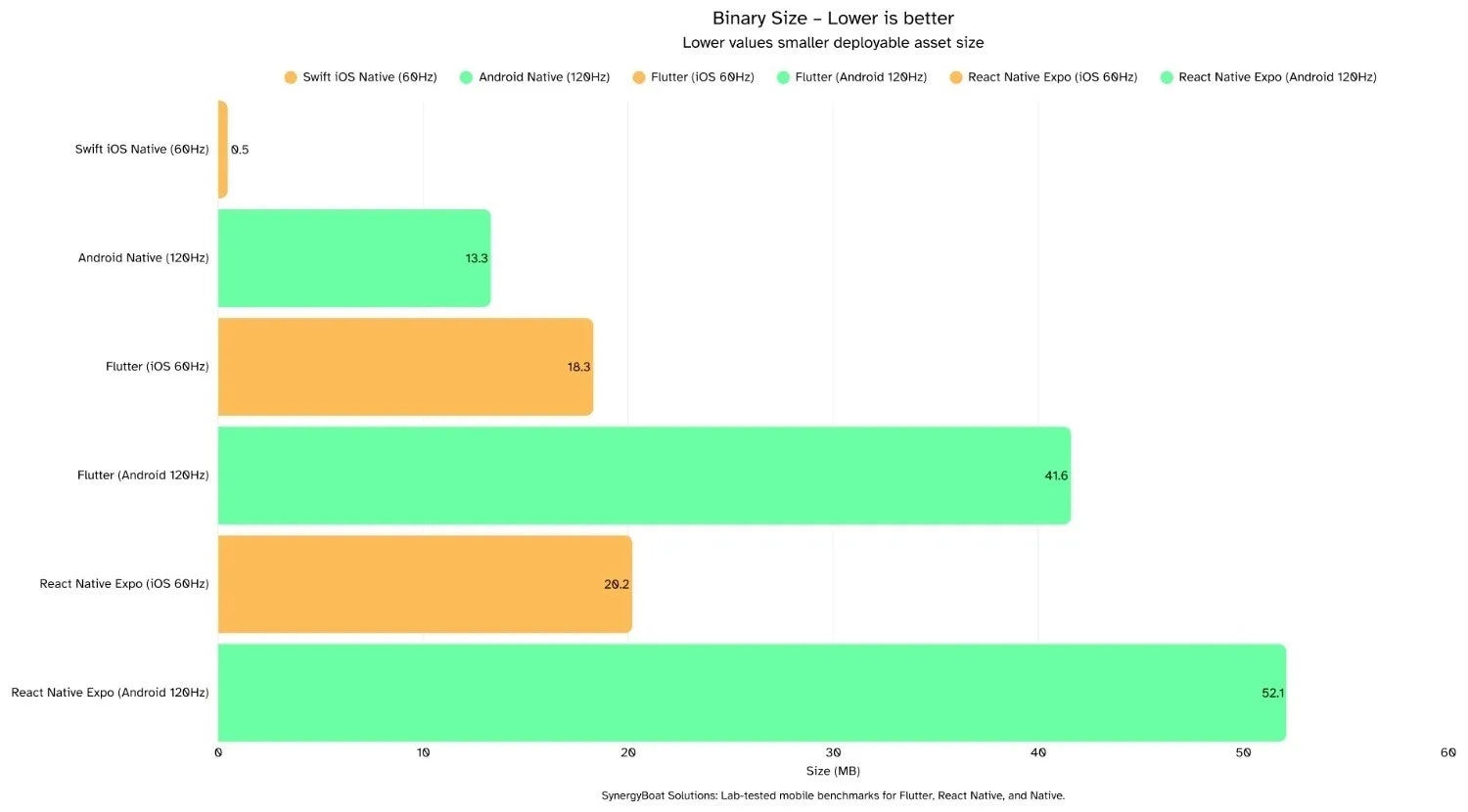

Binary Size is the size of the built app artifacts generated from the project. Lower is better. It affects store download, install time, update bandwidth, and cold start I/O due to package decompression and library loading.

However, single numbers won’t show the full picture. iOS and Android handle frameworks and splitting differently, so absolute sizes are not directly comparable across OS.

Practical rule of thumb: Keep minimal screens in low double digits on Android and sub-20 MB on iOS for cross-platform stacks. Track deltas over time. Sudden jumps usually come from large assets, extra native modules, or disabled shrinkers.

Build Artifact Note: iOS often reports the .ipa or uncompressed .app without dSYMs. Android may be an APK or an AAB that Play splits per ABI and density. React Native with Expo includes runtime components that increase baseline size. Flutter bundles the engine and ICU data, which raises baselines versus pure native.

Insights:

iOS

Android

How to read this: iOS native looks tiny because the OS ships most frameworks. Cross-platform stacks bundle a runtime, so baselines are higher. On Android, final user download from the store is often smaller than the raw APK due to AAB splitting. Always pair binary size with TTFF and ΔMemory to avoid trading size for runtime cost.

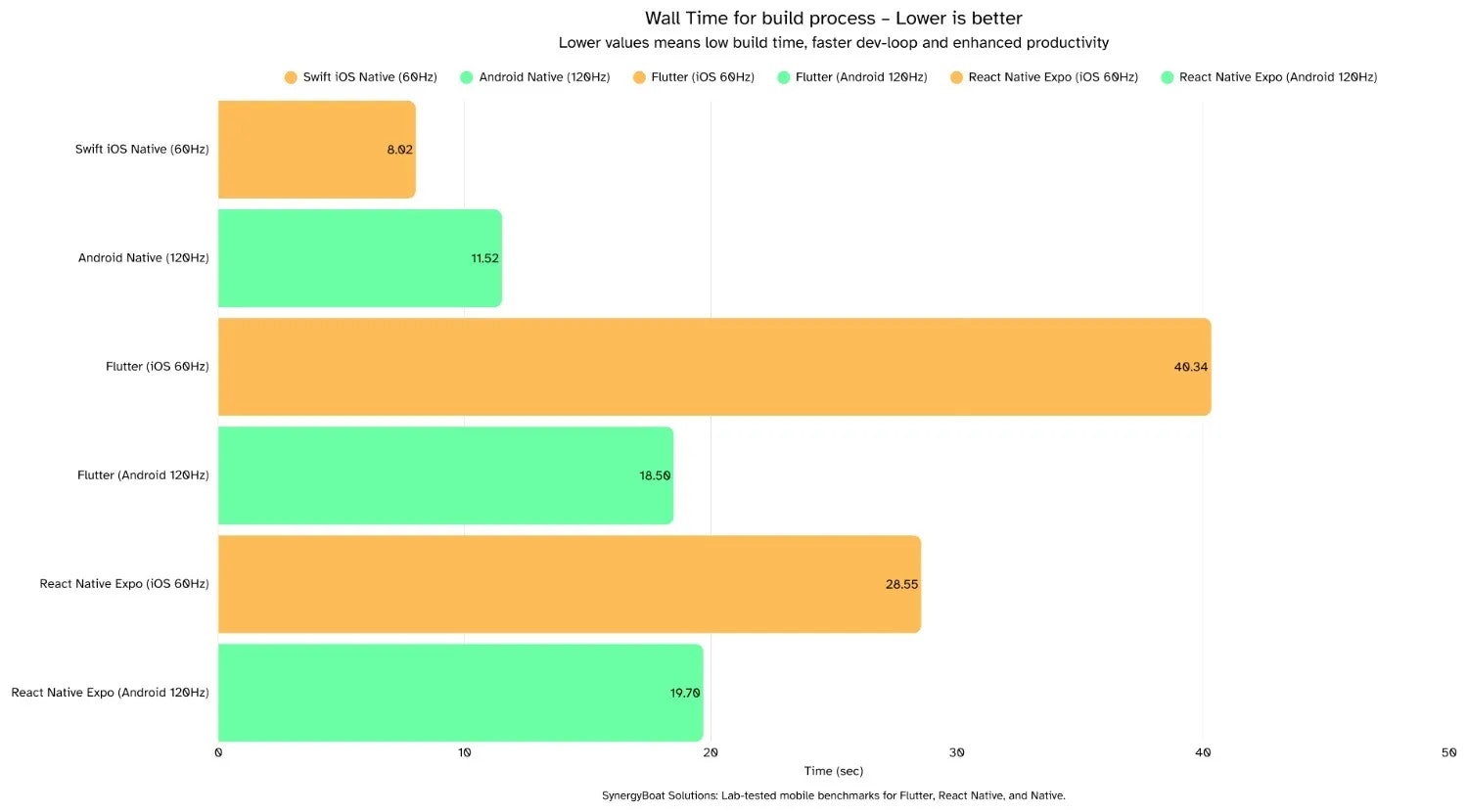

Build Time is the wall-clock time from a code change to the next runnable build on device or simulator after a complete stop. Lower is better. It directly impacts developer velocity, iteration cadence, and the number of safe experiments you can run in a day.

Always consider variability across runs and remember that tooling state, caches, and signing can skew results. Compare like for like: same machine, same project state, same build mode.

Insights:

iOS

Android

How to read this: Dev-loop time measures developer experience, not user experience. A 10 to 20 second gap translates into dozens of extra iterations per day.

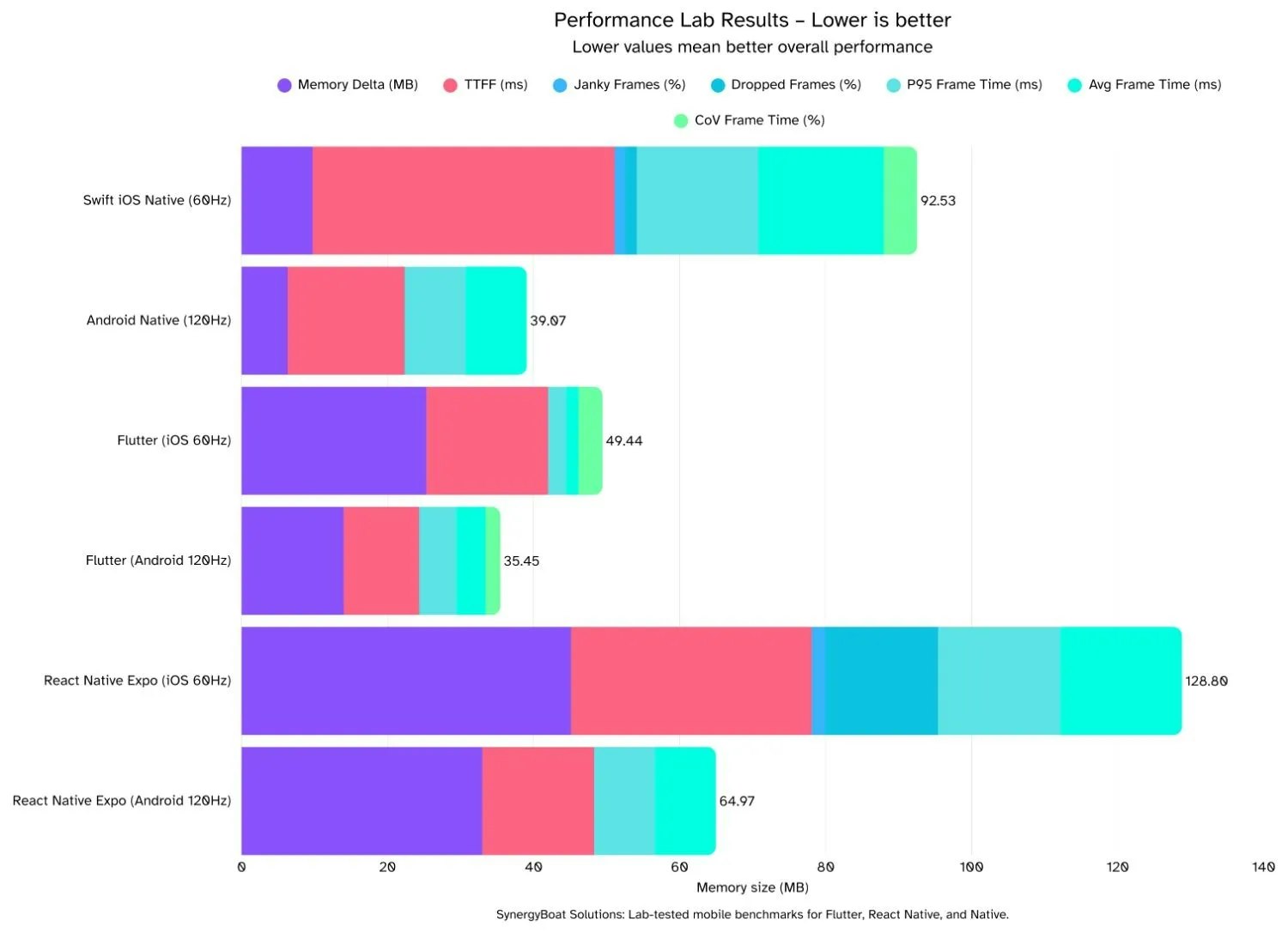

Overall Performance Composite is a stacked roll-up of key signals from the run: ΔMemory, TTFF, Jank%, DroppedFrames%, p95, Average Frame Time, and CoV. Lower is better. Use it as a quick triage to see which stack carries the smallest combined burden during the benchmarked scroll.

Composite Index Note: This bar combines multiple metrics on a consistent scale so you can compare frameworks at a glance. It is for prioritization, not for absolute claims.
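The exact scaling behind the composite is not described, so the Python sketch below shows only one plausible approach: divide each metric by the worst (largest) value among the compared stacks, then sum the normalized parts. The stack names and numbers are placeholders, not results from this study.

```python
def composite_scores(metrics_by_stack: dict[str, dict[str, float]]) -> dict[str, float]:
    """Sum of per-metric values, each scaled by the worst value across stacks (lower is better)."""
    metric_names = list(next(iter(metrics_by_stack.values())).keys())
    scores = {stack: 0.0 for stack in metrics_by_stack}
    for name in metric_names:
        worst = max(m[name] for m in metrics_by_stack.values())
        for stack, m in metrics_by_stack.items():
            # A metric where every stack scores 0 contributes nothing.
            scores[stack] += m[name] / worst if worst > 0 else 0.0
    return scores

# Placeholder data for two hypothetical stacks.
example = {
    "stack_a": {"ttff_ms": 10.0, "jank_pct": 4.0},
    "stack_b": {"ttff_ms": 5.0, "jank_pct": 2.0},
}
print(composite_scores(example))
```

Scaling by the worst value keeps every metric in the 0 to 1 range so that no single unit (MB, ms, percent) dominates the stacked bar.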

Insights:

iOS (60 Hz)

Android (120 Hz)

How to read this: Lower bar equals less combined cost. Scan chunk colors to see which metric dominates. Then flip to the matching detailed chart to validate. Use this view to pick where engineering effort pays off fastest.

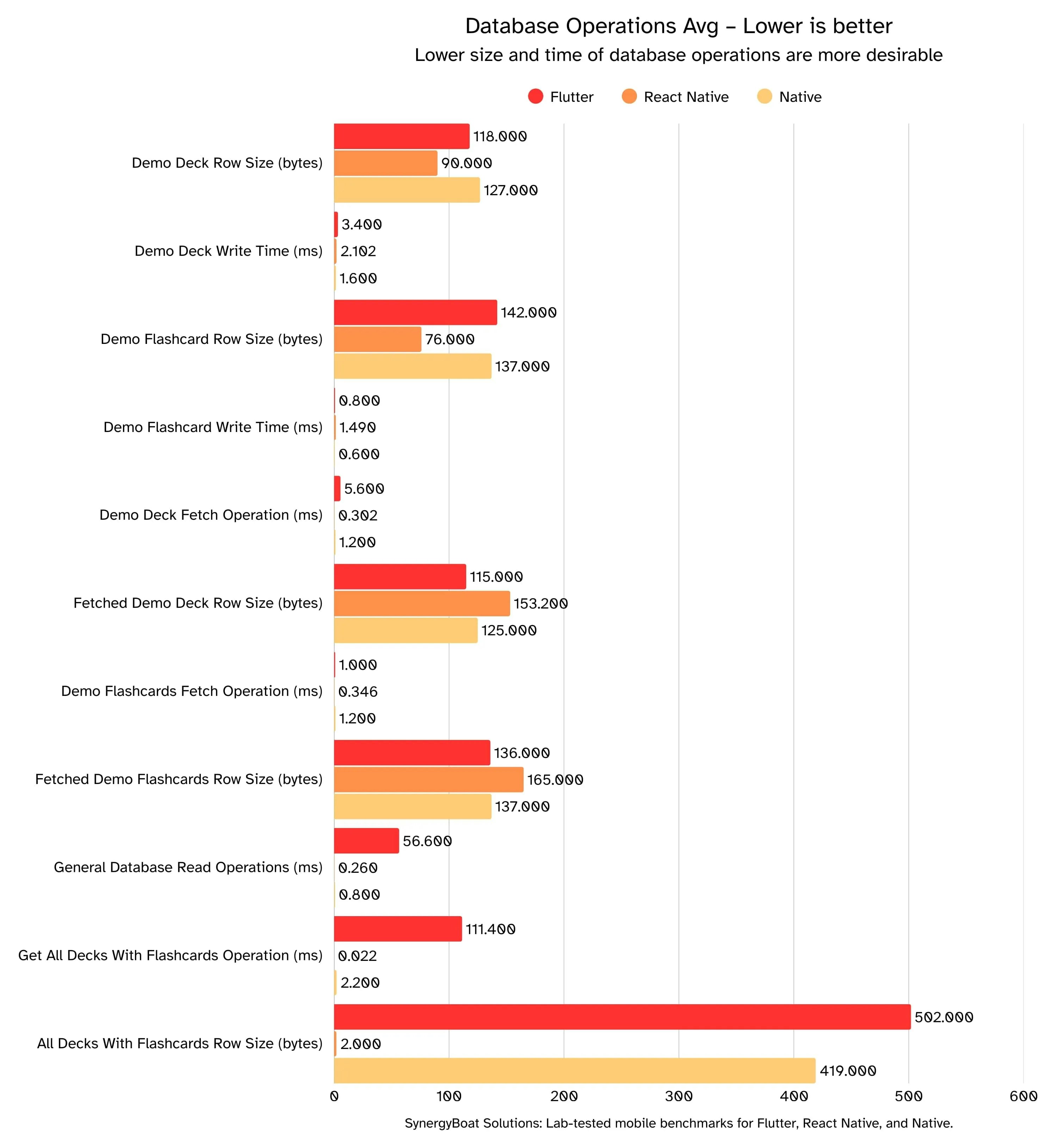

What do these charts show?

Average times for common reads and writes, plus the average row sizes involved. Lower is better for time and size. This reflects how efficiently each stack moves data during deck and flashcard workflows.

Read DB timings alongside ΔMemory, TTFF, and your frame-time metrics to see whether storage work correlates with UI hitches. Always pair time with the payload size you are moving.

Practical rule of thumb

Keep simple writes under ~5 ms, simple fetches under ~10 ms, and aggregate queries under ~20 ms on a modern device. If averages creep above those ranges, batch operations, index the hot paths, and trim payloads.

DB Measurement Note: These numbers are app-level timings around database calls, not OS-level I/O traces.
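To make "app-level timing" concrete, here is a Python sketch that wraps database calls with a wall-clock timer, using the standard-library sqlite3 module and an in-memory database. The table schema and helper names are hypothetical and are not taken from the benchmark apps.

```python
import sqlite3
import time

def timed_ms(fn, *args):
    """Run fn(*args) and return (result, elapsed wall-clock milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, (time.perf_counter() - start) * 1000.0

# Hypothetical schema for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE flashcards (id INTEGER PRIMARY KEY, deck_id INTEGER, front TEXT, back TEXT)"
)

def insert_card(deck_id: int, front: str, back: str) -> None:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO flashcards (deck_id, front, back) VALUES (?, ?, ?)",
            (deck_id, front, back),
        )

def fetch_deck(deck_id: int) -> list:
    return conn.execute(
        "SELECT id, front, back FROM flashcards WHERE deck_id = ?", (deck_id,)
    ).fetchall()

_, write_ms = timed_ms(insert_card, 1, "Q", "A")
rows, read_ms = timed_ms(fetch_deck, 1)
print(f"write: {write_ms:.3f} ms, read: {read_ms:.3f} ms, rows: {len(rows)}")
```

Timing at this level includes driver and wrapper overhead, which is what the user actually waits on, but it cannot separate disk I/O from everything else.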

Implementation Note: We use an ORM on Flutter and Native, so there is framework overhead on those stacks. React Native (Expo) uses expo-sqlite directly, so there is no ORM layer in RN. This difference can influence absolute timings. Interpret small gaps with that in mind.

Insights:

Flutter (with ORM)

React Native (direct SQLite, no ORM)

Native (both with ORM)

Takeaways

Flutter

React Native

Native (Swift/Kotlin)

If the averages look equal, pick the stack with more spare room and smaller spikes. That’s the one that stays smooth when real users do messy things.

Benchmarks are numbers. Strategy is about what you do with them. The lesson from our study is simple:

Don’t pick a framework because it wins on averages. Pick the one that fits your product’s lifecycle, team DNA, and performance tolerance.

The broader insight is that runtime tails, not averages, define user experience. Optimize for P95 latency, dropped frames, and memory growth; that’s where frustration or delight lives.

Tool choice is not permanent. Flutter, React Native, and Native can co-exist in your portfolio. The right strategy is knowing when to double down, when to hybridize, and when to pivot.

Ship smoother, faster, smarter.

Choose your boost:

Reply with your app type, current framework, target devices, and biggest performance pain, and we will map a quick win and a path to excellent user experience.

Email us at ahoy@synergyboat.com or visit Synergyboat.com

Software Engineer

Utkarsh is a mid-level engineer with strong experience in networking and server-side technologies.